Description of predict_aspects method

Katarzyna Pękala

2021-03-10

Source:vignettes/vignette_aspect_importance_indepth.Rmd

vignette_aspect_importance_indepth.RmdIntroduction

Predict aspects function takes on the challenge of interpreting model built on highly dimensional data. There exist a number of methods for local explanation of black-box models, like Break Down, Shap or LIME. However, the problem arises when the explanatory variables are correlated.

Predict aspects’ aim is to increase the interpretability of the black box model by providing instance-level and data-level explainer for the groups of explanatory variables. It enables grouping predictors into entities called aspects. Afterwards, it can calculate the contribution of those aspects to the prediction.

Intuition

Let’s suppose that we built a model on highly dimensional data and we are using this model to predict an outcome for a new observation. We would like to gain insight which features are contributing more and which less, to this calculated prediction. However, when we calculate such importance of every single feature, the image may be still unclear, because some of those features maybe correlated. To gain better understanding, we can group those features. And afterwards, we can calculate the contribution (importance) to the prediction of every group of features (aspects). Hence the method predict aspects.

To achieve that goal, the method works in following way: it uses subset of observations from the original dataset and than it modifies it, so every observation will have at least one aspect (meaning at least one group of features) replaced by the data from the observation of interest. Then we will build linear model that will predict how those replacements change the prediction of the modified data.

Method

We start by having dataset \(\mathcal{X}\) and model \(f\) built on this dataset. We would like to explain the prediction for the observation of interest \(x_*\).

Before we can use the method, we need to group the explanatory variables into aspects. We can use two different approaches: we can built the aspect list arbitrarily by using domain expertise or we can use group_variables() function that will do the grouping for us by using variables correlations. In the second approach, we are going to get aspects where every absolute value of pair-wise correlation of explanatory variables is no smaller than a given level. It should be noted that group_variables() works only for numerical variables.

The predict_aspects function algorithm starts with sampling observations from the dataset \(\mathcal{X}\) into matrix \(A\).

Afterwards, it creates binary matrix \(X'\). The number of rows of this matrix is equal to number of sampled observations in \(A\). The number of columns is equal to the number of aspects.

In the next step, matrix \(A\) is modified into matrix \(A'\). The binary matrix \(X'\) directs how the modification will work: for given observation from \(A\), function checks in binary matrix \(X'\) which aspects should be replaced by aspects from the observation of interest \(x_*\).

In result, we obtain a modified matrix \(A'\) where for every observation at least one aspect is replaced with the data from the observation of interest.

Next, the method checks how the aspects replacement changed the prediction. In other words, it looks at the difference between predictions for modified matrix \(A'\) and matrix \(A\).

Finally, we use linear model on the binary matrix \(X'\) where the difference in predictions is the dependent variable. Model’s coefficients are the results we are looking for - the values of aspects importance.

We can interpret coefficient \(\beta_i\) as the average change in prediction caused by replacing in \(A\) the variables (grouped in aspect \(i\)) by the variables from \(x_*\).

Predict aspects algorithm:

-

\(f\) - model

-

\(\mathcal{X}\) - dataset

-

\(x_*\) - observation to be explained

-

\(\mathcal{P}\) - aspects list, \(\mathcal{P} = {q_1, ..., q_m}\), partition of set of indexes \(J = {1, ..., p}\)

- \(b\) - size of sample

\(A\) = \([a_i^j]_{b \times p}\) = select_sample(\(\mathcal{X}\), \(b\))

sample (with replacement) B rows from \(\mathcal{X}\)\(X'\) = \([{x'}_i^k]_{b \times m}\) = sample_aspects(\(m\), \(b\))

sample binary matrix of size \(b \times m\)\(A'\) = \([{a'} _i^j]_{b\times p}\) = replace_aspects(\(A\), \(X'\))

\([{a'}_i^j] = [a_i^j]\), if \([{x'}_i^k] = 0\) where \(j \in q_k\)

\([{a'}_i^j] = x_{*j}\), if \([{x'}_i^k] = 1\) where \(j \in q_k\)\(Y_m = f(A') - f(A)\)

fit linear model \(g\), \(g(X') = Y_m\)

return coefficients of \(g\)

Example

To illustrate how the method works, we will use Boston Housing dataset from mlbench package. This well known dataset contains housing data for 506 census tracts of Boston. We will be predicting cmedv - corrected median value of owner-occupied homes (in USD 1000’s).

We are going to build two models: linear regression model and random forest. Those models will be built only on numerical variables. Then we will check the predictions for the observation called new_observation.

library(triplot)

library(mlbench)

library(randomForest)

library(DALEX)

data("BostonHousing2")

Boston <- BostonHousing2[,-c(1:5, 10)]

Boston_no_target <- BostonHousing2[,-c(1:6, 10)]

new_observation <- Boston_no_target[4,]

Boston_lm <- lm(cmedv ~., data = Boston)

Boston_rf <- randomForest(cmedv ~ ., data = Boston)

predict(Boston_lm, new_observation)#> 4

#> 28.78676

predict(Boston_rf, new_observation)#> 4

#> 33.97821As we observe that those two models’ predictions for the new_observation are different, we would like to understand which groups of the observation’s features contribute to this differences. For that, we will use aspects_importance().

In the beginning, we have to build an aspect list. Then we will call aspects_importance() for both of those models to check:

- which aspects have the biggest contribution to the prediction in each case,

- what is minimal value of pairwise absolute correlation in each group,

- whether any aspect contains negatively correlated pair of features (

neg).

Finally, we will plot aspects_importance() results for both models.

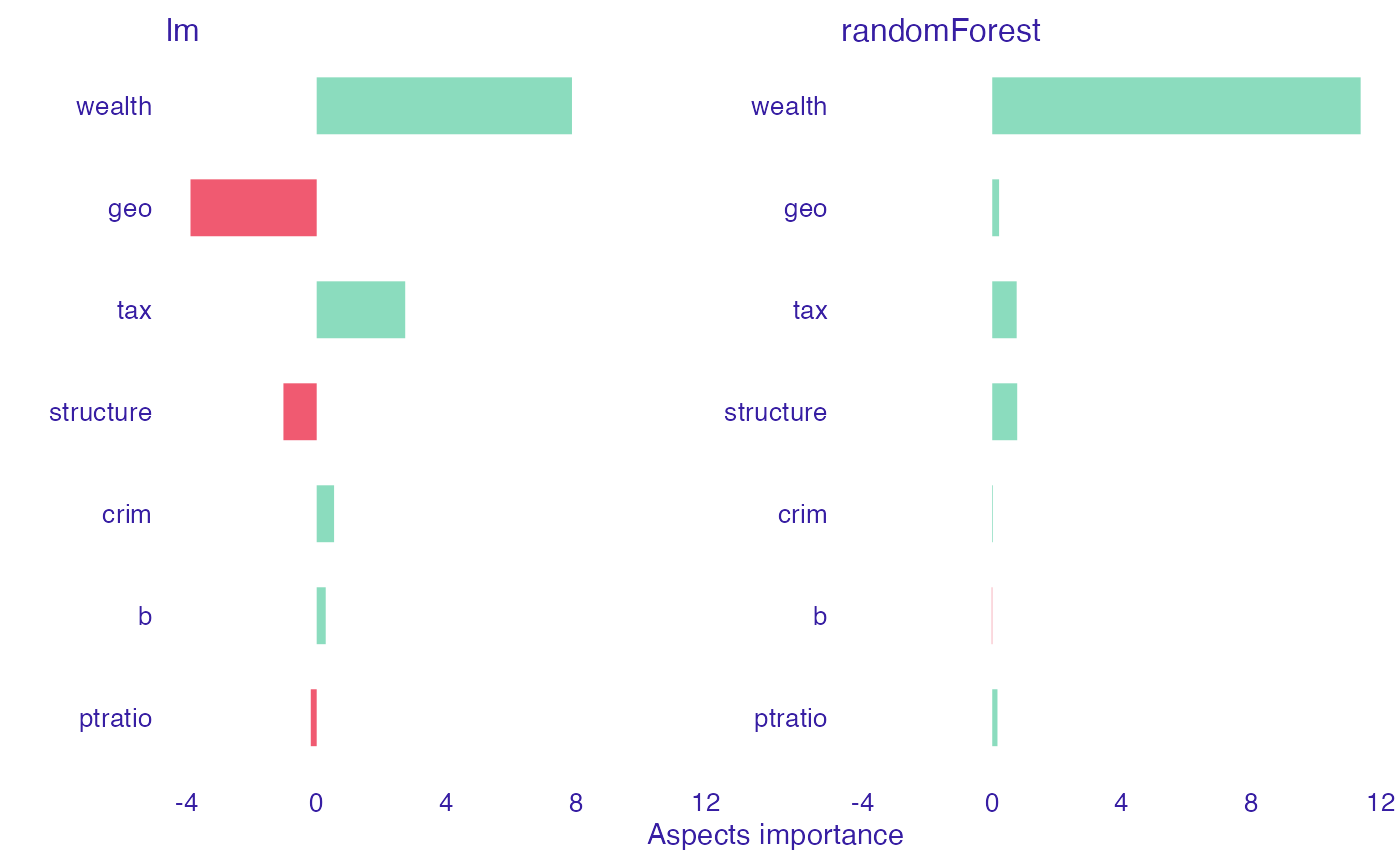

We can notice that in random forest model, almost every group of features (except b and crim) have positive influence on the prediction. Wealth (rm and lstat) has the most important contribution, it’s significantly bigger than the rest. On the other hand, in linear model we can observe that some features have negative impact (geo, structure, ptartio). Nonetheless, positive contribution of wealth is still the most important, as in random forest model. After seeing this results, we can now understand why the prediction in linear model case is smaller than in random forest one.

set.seed(123)

Boston_aspects_m <- list(geo = c("dis", "nox", "rad"),

wealth = c("rm", "lstat"),

structure = c("indus", "age", "zn"),

ptratio = "ptratio",

b = "b",

tax = "tax",

crim = "crim")

explain_Boston_lm <- explain(Boston_lm,

Boston_no_target,

verbose = FALSE)

explain_Boston_rf <- explain(Boston_rf,

Boston_no_target,

verbose = FALSE)

Boston_ai_lm <- predict_aspects(x = explain_Boston_lm,

new_observation = new_observation,

variable_groups = Boston_aspects_m,

N = 5000,

show_cor = TRUE,

label = "LM")

Boston_ai_rf <- predict_aspects(x = explain_Boston_rf,

new_observation = new_observation,

variable_groups = Boston_aspects_m,

N = 5000,

show_cor = TRUE,

label = "RF")

print(Boston_ai_lm, show_features = TRUE)#> variable_groups importance features

#> 3 wealth 7.8766 rm, lstat

#> 2 geo -3.8938 dis, nox, rad

#> 7 tax 2.7309 tax

#> 4 structure -1.0264 indus, age, zn

#> 8 crim 0.5362 crim

#> 6 b 0.2783 b

#> 5 ptratio -0.1824 ptratio

print(Boston_ai_rf, show_features = TRUE)#> variable_groups importance features

#> 3 wealth 11.36815 rm, lstat

#> 4 structure 0.77057 indus, age, zn

#> 7 tax 0.75401 tax

#> 2 geo 0.21135 dis, nox, rad

#> 5 ptratio 0.16134 ptratio

#> 8 crim 0.02273 crim

#> 6 b -0.01364 b

plot(Boston_ai_lm, Boston_ai_rf)

In some cases, manually grouping features into aspects may present some challenges. For that reason, we added function that can do that for us. Function group_variables() groups correlated features into aspects, given a correlation cut-off level.

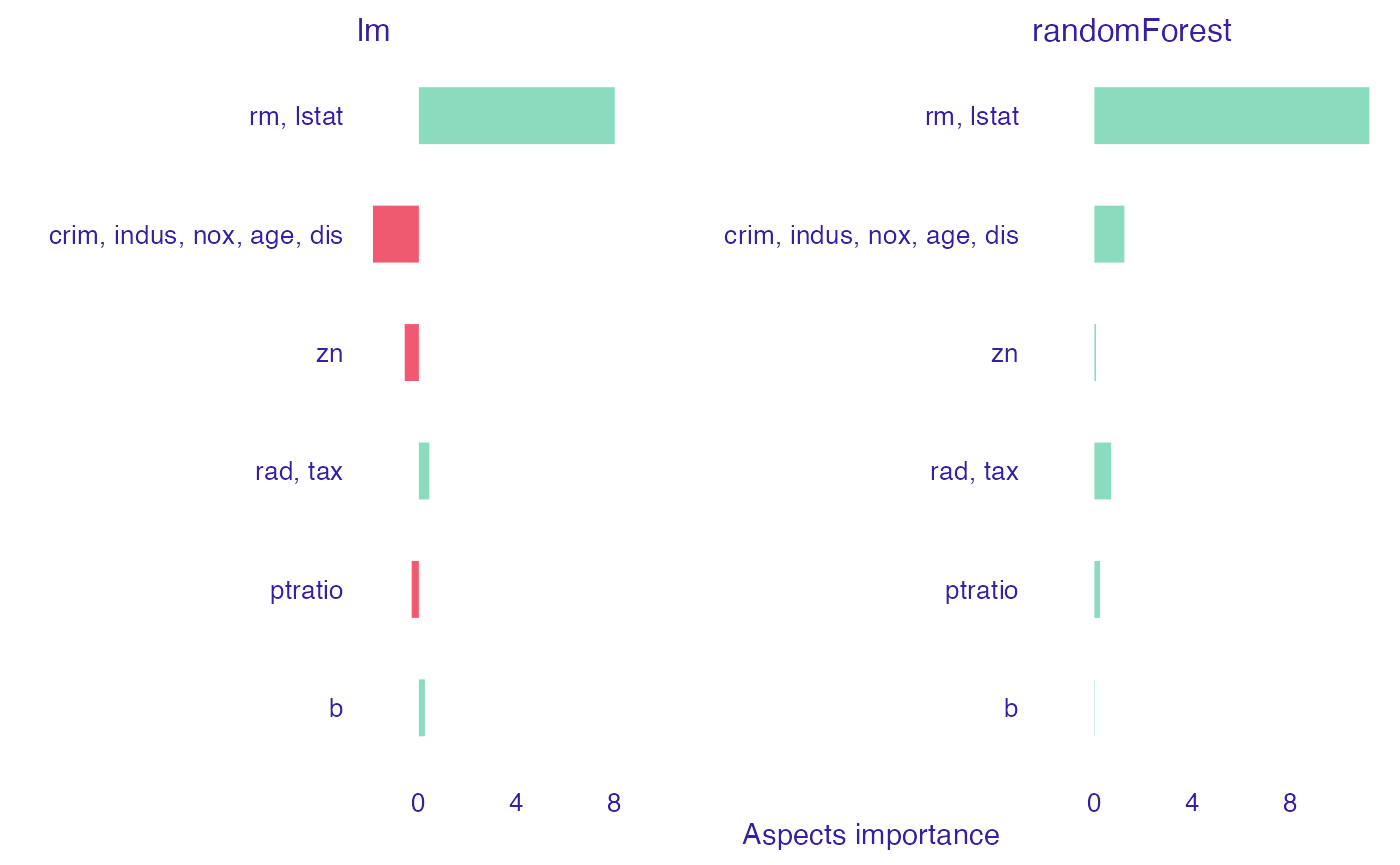

Below we will use group_variables() function with a cut off level set on 0.6. As a result, we get a list of variables groups where absolute value of features’ pairwise correlation is at least at 0.6. Afterwards, we call aspects_importance() function again.

Since group_variables() built different list that us, we can observe a little bit different results. However, the final image still allow us to see that what we established as geographical and structure features, in general, have negative contribution on the prediction in linear case, while positive in the random forest one.

set.seed(123)

Boston_aspects_a <- group_variables(Boston_no_target, 0.6)

Boston_ai_lm_2 <- predict_aspects(x = explain_Boston_lm,

new_observation = new_observation,

variable_groups = Boston_aspects_a,

N = 10000,

show_cor = TRUE,

label = "LM")

Boston_ai_rf_2 <- predict_aspects(x = explain_Boston_rf,

new_observation = new_observation,

variable_groups = Boston_aspects_a,

N = 10000,

show_cor = TRUE,

label = "RF")

print(Boston_ai_lm_2, show_features = TRUE)#> variable_groups importance features

#> 4 aspect.group3 8.0082 rm, lstat

#> 2 aspect.group1 -1.8763 crim, indus, nox, age, dis

#> 3 aspect.group2 -0.5785 zn

#> 5 aspect.group4 0.4270 rad, tax

#> 6 aspect.group5 -0.2934 ptratio

#> 7 aspect.group6 0.2445 b

print(Boston_ai_rf_2, show_features = TRUE)#> variable_groups importance features

#> 4 aspect.group3 11.24083 rm, lstat

#> 2 aspect.group1 1.22387 crim, indus, nox, age, dis

#> 5 aspect.group4 0.68666 rad, tax

#> 6 aspect.group5 0.22883 ptratio

#> 3 aspect.group2 0.06660 zn

#> 7 aspect.group6 0.01682 b

plot(Boston_ai_lm_2, Boston_ai_rf_2, show_features = FALSE)

Hierarchical aspects importance

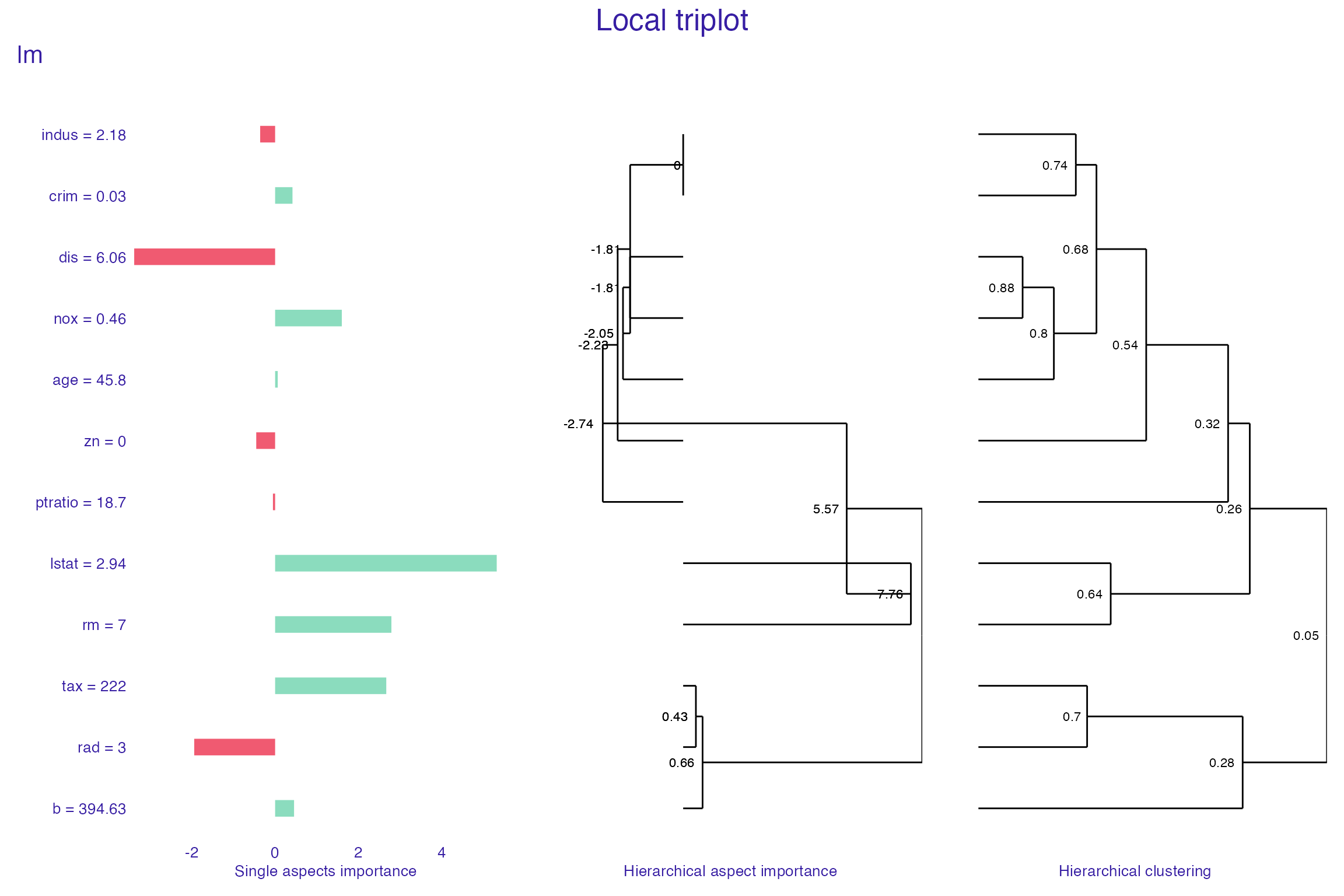

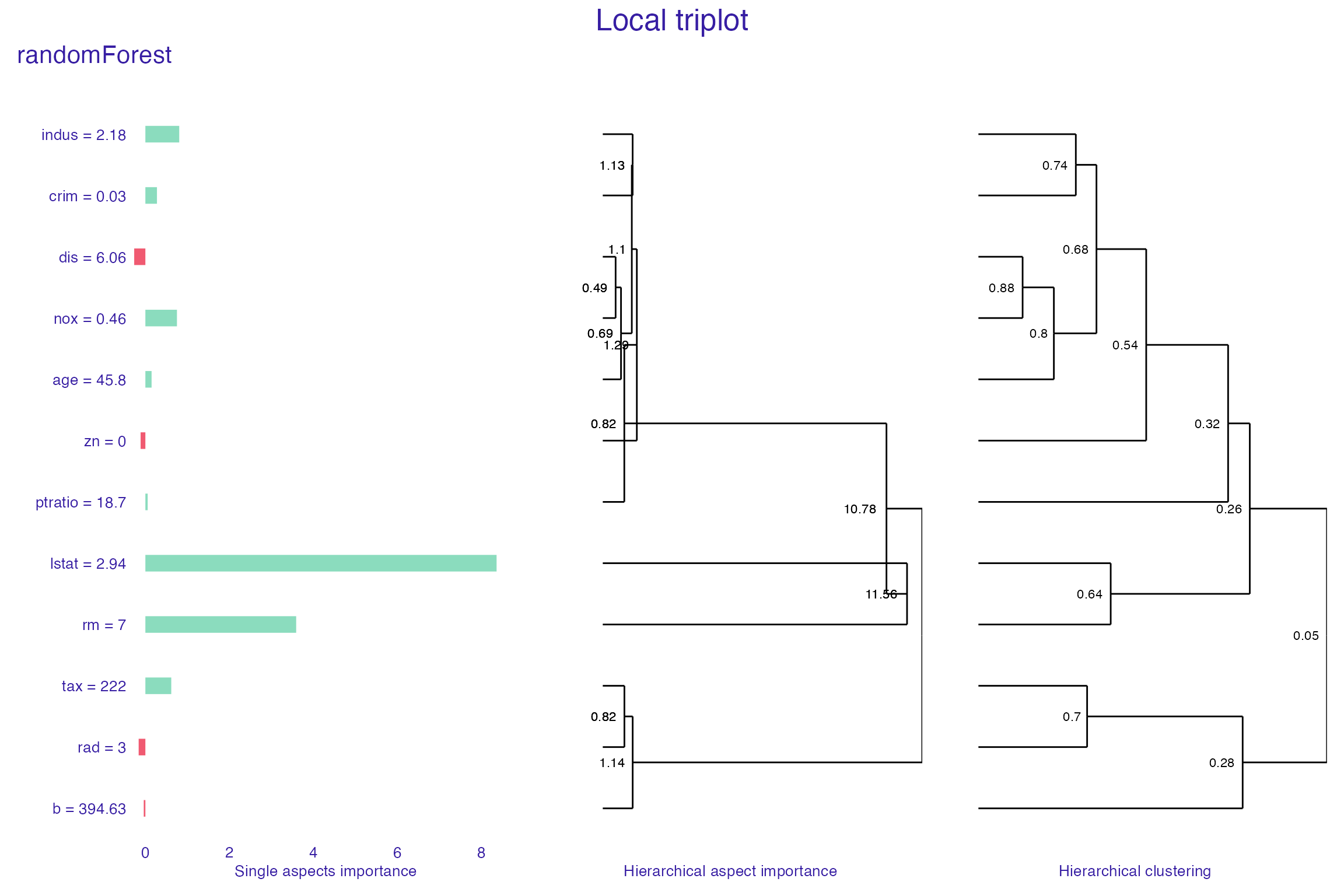

Triplot is a tool built on aspects_importance function, that allows us to go one step further in our understanding of the inner workings of a black box model.

It illustrates, in one place:

- the importance of every single feature,

- hierarchical aspects importance (explained below),

- order of grouping features into aspects in

group_variables().

Hierarchical aspects importance allows us to check the values of aspects importance for the different levels of variables grouping. Method starts with looking at the aspect importance where every aspect has one, single variable. Afterwards, it iteratively creates bigger aspects by merging the ones with the highest level of absolute correlation into one aspect and calculating it’s contribution to the prediction.

It should be noted that similarly to group_variables(), calculate_triplot() works for the datasets with only numerical variables.

set.seed(123)

tri_lm <- predict_triplot(x = explain_Boston_lm,

new_observation = new_observation,

N = 10000)

plot(tri_lm,

margin_mid = 0.2,

show_model_label = TRUE)

tri_rf <- predict_triplot(x = explain_Boston_rf,

new_observation = new_observation,

N = 10000)

plot(tri_rf,

margin_mid = 0.2,

add_last_group = TRUE,

show_model_label = TRUE)

Lasso

Behind the scenes, aspect importance numbers are really linear model coefficients. That allowed us to add one more tool to control the method result. By using lasso regression, we can control how many nonzero coefficients (nonzero aspects importance values) are present in the final explanation. To use predict_aspects() with lasso, we have to provide n_var parameter, which declares how many aspects importance values we would like to get in predict_aspects() results.

Suppose that in our last example (random forest model with aspect list build by group_variables), we would like to calculate the importance of variables’ aspects, while controlling that three of them should be equal to 0. We will call predict_aspects() on this model, with n_var parameter set to 3.

In result, we get what we expected - three nonzero aspect importance values. We can observe that there exists significant difference between the level of contribution of aspect containing rm and lstat features and the other two groups.

set.seed(123)

ai_Boston_rf <- predict_aspects(x = explain_Boston_rf,

new_observation = new_observation,

variable_groups = Boston_aspects_a,

N = 10000,

n_var = 3)

print(ai_Boston_rf, show_features = TRUE, show_cor = TRUE)#> variable_groups importance features min_cor sign

#> 4 aspect.group3 10.6090 rm, lstat 0.6408316 neg

#> 2 aspect.group1 0.3601 crim, indus, nox, age, dis 0.6794867 neg

#> 3 aspect.group2 0.0000 zn NA

#> 5 aspect.group4 0.0000 rad, tax 0.7048757 pos

#> 6 aspect.group5 0.0000 ptratio NA

#> 7 aspect.group6 0.0000 b NASummary

Predict aspects allow us to check how the level of prediction is influenced by the different groups of features. We added additional tools to this method that let us group features automatically, control how many nonzero values are in the method results or see how aspect importance values are changing while aspects are one by one increasing in size (hierarchical aspects importance).

However, we still see place for experimenting with stability of the results (size of the subset used by the predict_aspects), clarity of triplot and with lasso regression as the method extensions.

Session info

#> R version 4.0.4 (2021-02-15)

#> Platform: x86_64-apple-darwin17.0 (64-bit)

#> Running under: macOS Catalina 10.15.7

#>

#> Matrix products: default

#> BLAS: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRblas.dylib

#> LAPACK: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRlapack.dylib

#>

#> locale:

#> [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

#>

#> attached base packages:

#> [1] stats graphics grDevices utils datasets methods base

#>

#> other attached packages:

#> [1] DALEX_2.1.1 randomForest_4.6-14 mlbench_2.1-3

#> [4] triplot_1.3.1

#>

#> loaded via a namespace (and not attached):

#> [1] shape_1.4.5 xfun_0.21 splines_4.0.4 lattice_0.20-41

#> [5] colorspace_2.0-0 vctrs_0.3.6 htmltools_0.5.1.1 yaml_2.2.1

#> [9] utf8_1.1.4 survival_3.2-7 rlang_0.4.10 pkgdown_1.6.1.9000

#> [13] pillar_1.5.1 glue_1.4.2 foreach_1.5.1 lifecycle_1.0.0

#> [17] stringr_1.4.0 munsell_0.5.0 gtable_0.3.0 ragg_1.1.1

#> [21] codetools_0.2-18 memoise_2.0.0 evaluate_0.14 labeling_0.4.2

#> [25] knitr_1.31 fastmap_1.1.0 fansi_0.4.2 highr_0.8

#> [29] scales_1.1.1 cachem_1.0.4 desc_1.3.0 farver_2.1.0

#> [33] systemfonts_1.0.1 fs_1.5.0 textshaping_0.3.1 ggplot2_3.3.3

#> [37] digest_0.6.27 stringi_1.5.3 grid_4.0.4 rprojroot_2.0.2

#> [41] tools_4.0.4 magrittr_2.0.1 glmnet_4.1-1 patchwork_1.1.1

#> [45] tibble_3.1.0 ggdendro_0.1.22 crayon_1.4.1 pkgconfig_2.0.3

#> [49] ellipsis_0.3.1 MASS_7.3-53 Matrix_1.3-2 rmarkdown_2.7

#> [53] iterators_1.0.13 R6_2.5.0 compiler_4.0.4