factorMerger: a set of tools to support results from post-hoc testing

Agnieszka Sitko

2018-01-11

Introduction

The aim of factorMerger is to provide a set of tools to support results from post hoc comparisons. Post hoc testing is an analysis performed after running ANOVA to examine differences between group means (of some response numeric variable) for each pair of groups (groups are defined by a factor variable).

This project arose from the need to create a method of post hoc testing which gives the hierarchical interpretation of relations between groups means. Thereby, for a given significance level we may divide groups into non-overlapping clusters.

Algorithm inputs

In the current version the factorMerger package supports parametric models:

- one-dimensional Gaussian (with the argument

family = "gaussian"), - multi dimensional Gaussian (with the argument

family = "gaussian"), - binomial (with the argument

family = "binomial"), - survival (with the argument

family = "survival").

There are four algorithms available: adaptive, fast-adaptive, fixed and fast-fixed (they are set with the method argument of mergeFactors). Fast algorithms enable to unite only those groups whose group statistics (i.e. means in the Gaussian case) are close.

Generating samples

To visualize functionalities of factorMerger we use samples or real data examples with response variable whose distribution follow one of the listed above. The corresponding factor variable is sampled uniformly from a finite set of a size \(k\).

To do so, we may use function generateSample or generateMultivariateSample.

library(factorMerger)

library(knitr)

library(dplyr)

randSample <- generateMultivariateSample(N = 100, k = 10, d = 3)Merging factors

mergeFactors is a function that performs hierarchical post hoc testing. As arguments it takes:

- matrix/data.frame/vector with numeric response,

- factor vector defining groups.

By default (with argument abbreviate = TRUE) factor levels are abbreviated and surrounded with brackets.

Multi-dimensional Gaussian model

Computations

fmAll <- mergeFactors(response = randSample$response, factor = randSample$factor)mergeFactors outputs with information about the ‘merging history’.

mergingHistory(fmAll, showStats = TRUE) %>%

kable()| groupA | groupB | model | pvalVsFull | pvalVsPrevious | |

|---|---|---|---|---|---|

| 0 | -395.3935 | 1.0000 | 1.0000 | ||

| 1 | (I) | (D) | -395.5941 | 0.9494 | 0.9494 |

| 2 | (J) | (B) | -395.8266 | 0.9926 | 0.9369 |

| 3 | (G) | (J)(B) | -396.2185 | 0.9971 | 0.8710 |

| 4 | (I)(D) | (F) | -397.0410 | 0.9955 | 0.6811 |

| 5 | (A) | (E) | -398.3216 | 0.9884 | 0.4994 |

| 6 | (H) | (A)(E) | -399.6191 | 0.9817 | 0.4888 |

| 7 | (C) | (H)(A)(E) | -400.8005 | 0.9788 | 0.5255 |

| 8 | (I)(D)(F) | (G)(J)(B) | -404.3610 | 0.8676 | 0.0785 |

| 9 | (C)(H)(A)(E) | (I)(D)(F)(G)(J)(B) | -411.4308 | 0.3300 | 0.0034 |

Each row of the above frame describes one step of the merging algorithm. First two columns specify which groups were merged in the iteration, columns model and GIC gather loglikelihood and Generalized Information Criterion for the model after merging. Last two columns are p-values for the Likelihood Ratio Test – against the full model (pvalVsFull) and against the previous one (pvalVsPrevious).

If we choose a fast version of merging one dimensional response is fitted using isoMDS{MASS}. Next, in each step only groups whose means are closed are compared.

fm <- mergeFactors(response = randSample$response, factor = randSample$factor,

method = "fast-fixed")

mergingHistory(fm, showStats = TRUE) %>%

kable()| groupA | groupB | model | pvalVsFull | pvalVsPrevious | |

|---|---|---|---|---|---|

| 0 | -395.3935 | 1.0000 | 1.0000 | ||

| 1 | (I) | (D) | -395.5941 | 0.9494 | 0.9494 |

| 2 | (J) | (B) | -395.8266 | 0.9926 | 0.9369 |

| 3 | (G) | (J)(B) | -396.2185 | 0.9971 | 0.8710 |

| 4 | (I)(D) | (F) | -397.0410 | 0.9955 | 0.6811 |

| 5 | (A) | (E) | -398.3216 | 0.9884 | 0.4994 |

| 6 | (H) | (A)(E) | -399.6191 | 0.9817 | 0.4888 |

| 7 | (C) | (H)(A)(E) | -400.8005 | 0.9788 | 0.5255 |

| 8 | (I)(D)(F) | (G)(J)(B) | -404.3610 | 0.8676 | 0.0785 |

| 9 | (C)(H)(A)(E) | (I)(D)(F)(G)(J)(B) | -411.4308 | 0.3300 | 0.0034 |

Final clusters

Algorithms implemented in the factorMerger package enable to create unequivocal partition of a factor. Below we present how to extract the partition from the mergeFactor output.

- predict new labels for observations

cutTree(fm)

#> [1] (G)(J)(B) (G)(J)(B) (I)(D)(F) (H) (C) (G)(J)(B) (G)(J)(B)

#> [8] (G)(J)(B) (A) (G)(J)(B) (G)(J)(B) (A) (E) (I)(D)(F)

#> [15] (I)(D)(F) (A) (H) (E) (G)(J)(B) (G)(J)(B) (A)

#> [22] (I)(D)(F) (E) (I)(D)(F) (I)(D)(F) (G)(J)(B) (E) (I)(D)(F)

#> [29] (E) (H) (I)(D)(F) (I)(D)(F) (E) (G)(J)(B) (I)(D)(F)

#> [36] (I)(D)(F) (I)(D)(F) (G)(J)(B) (A) (E) (I)(D)(F) (I)(D)(F)

#> [43] (E) (I)(D)(F) (I)(D)(F) (G)(J)(B) (I)(D)(F) (I)(D)(F) (A)

#> [50] (G)(J)(B) (E) (I)(D)(F) (E) (I)(D)(F) (I)(D)(F) (I)(D)(F)

#> [57] (A) (E) (C) (I)(D)(F) (G)(J)(B) (A) (G)(J)(B)

#> [64] (G)(J)(B) (H) (H) (I)(D)(F) (I)(D)(F) (C) (I)(D)(F)

#> [71] (I)(D)(F) (G)(J)(B) (I)(D)(F) (I)(D)(F) (G)(J)(B) (I)(D)(F) (A)

#> [78] (A) (I)(D)(F) (I)(D)(F) (H) (I)(D)(F) (G)(J)(B) (G)(J)(B)

#> [85] (C) (H) (A) (E) (I)(D)(F) (C) (I)(D)(F)

#> [92] (G)(J)(B) (G)(J)(B) (I)(D)(F) (G)(J)(B) (G)(J)(B) (H) (G)(J)(B)

#> [99] (I)(D)(F) (I)(D)(F)

#> Levels: (C) (H) (A) (E) (I)(D)(F) (G)(J)(B)By default, cutTree returns a factor split for the optimal GIC (with penalty = 2) model. However, we can specify different metrics (stat = c("loglikelihood", "p-value", "GIC") we would like to use in cutting. If loglikelihood or p-value is chosen an exact threshold must be given as a value parameter. Then cutTree returns factor for the smallest model whose statistic is higher than the threshold. If we choose GIC then value is interpreted as GIC penalty.

mH <- mergingHistory(fm, T)

thres <- mH$model[nrow(mH) / 2]

cutTree(fm, stat = "loglikelihood", value = thres)

#> [1] (G)(J)(B) (G)(J)(B) (I)(D)(F) (H) (C) (G)(J)(B) (G)(J)(B)

#> [8] (G)(J)(B) (A) (G)(J)(B) (G)(J)(B) (A) (E) (I)(D)(F)

#> [15] (I)(D)(F) (A) (H) (E) (G)(J)(B) (G)(J)(B) (A)

#> [22] (I)(D)(F) (E) (I)(D)(F) (I)(D)(F) (G)(J)(B) (E) (I)(D)(F)

#> [29] (E) (H) (I)(D)(F) (I)(D)(F) (E) (G)(J)(B) (I)(D)(F)

#> [36] (I)(D)(F) (I)(D)(F) (G)(J)(B) (A) (E) (I)(D)(F) (I)(D)(F)

#> [43] (E) (I)(D)(F) (I)(D)(F) (G)(J)(B) (I)(D)(F) (I)(D)(F) (A)

#> [50] (G)(J)(B) (E) (I)(D)(F) (E) (I)(D)(F) (I)(D)(F) (I)(D)(F)

#> [57] (A) (E) (C) (I)(D)(F) (G)(J)(B) (A) (G)(J)(B)

#> [64] (G)(J)(B) (H) (H) (I)(D)(F) (I)(D)(F) (C) (I)(D)(F)

#> [71] (I)(D)(F) (G)(J)(B) (I)(D)(F) (I)(D)(F) (G)(J)(B) (I)(D)(F) (A)

#> [78] (A) (I)(D)(F) (I)(D)(F) (H) (I)(D)(F) (G)(J)(B) (G)(J)(B)

#> [85] (C) (H) (A) (E) (I)(D)(F) (C) (I)(D)(F)

#> [92] (G)(J)(B) (G)(J)(B) (I)(D)(F) (G)(J)(B) (G)(J)(B) (H) (G)(J)(B)

#> [99] (I)(D)(F) (I)(D)(F)

#> Levels: (C) (H) (A) (E) (I)(D)(F) (G)(J)(B)In this example data partition is created for the last model from the merging path whose loglikelihood is greater than -397.041.

- get final clusters and clusters dictionary

getOptimalPartition(fm)

#> [1] "(C)" "(H)" "(A)" "(E)" "(I)(D)(F)" "(G)(J)(B)"Function getOptimalPartition returns a vector with the final cluster names from the factorMerger object.

getOptimalPartitionDf(fm)

#> orig pred

#> 1 (G) (G)(J)(B)

#> 2 (J) (G)(J)(B)

#> 3 (I) (I)(D)(F)

#> 4 (H) (H)

#> 5 (C) (C)

#> 9 (A) (A)

#> 11 (B) (G)(J)(B)

#> 13 (E) (E)

#> 14 (D) (I)(D)(F)

#> 35 (F) (I)(D)(F)Function getOptimalPartitionDf returns a dictionary in a data frame format. Each row gives an original label of a factor level and its new (cluster) label.

Similarly to cutTree, functions getOptimalPartition and getOptimalPartitionDf take arguments stat and threshold.

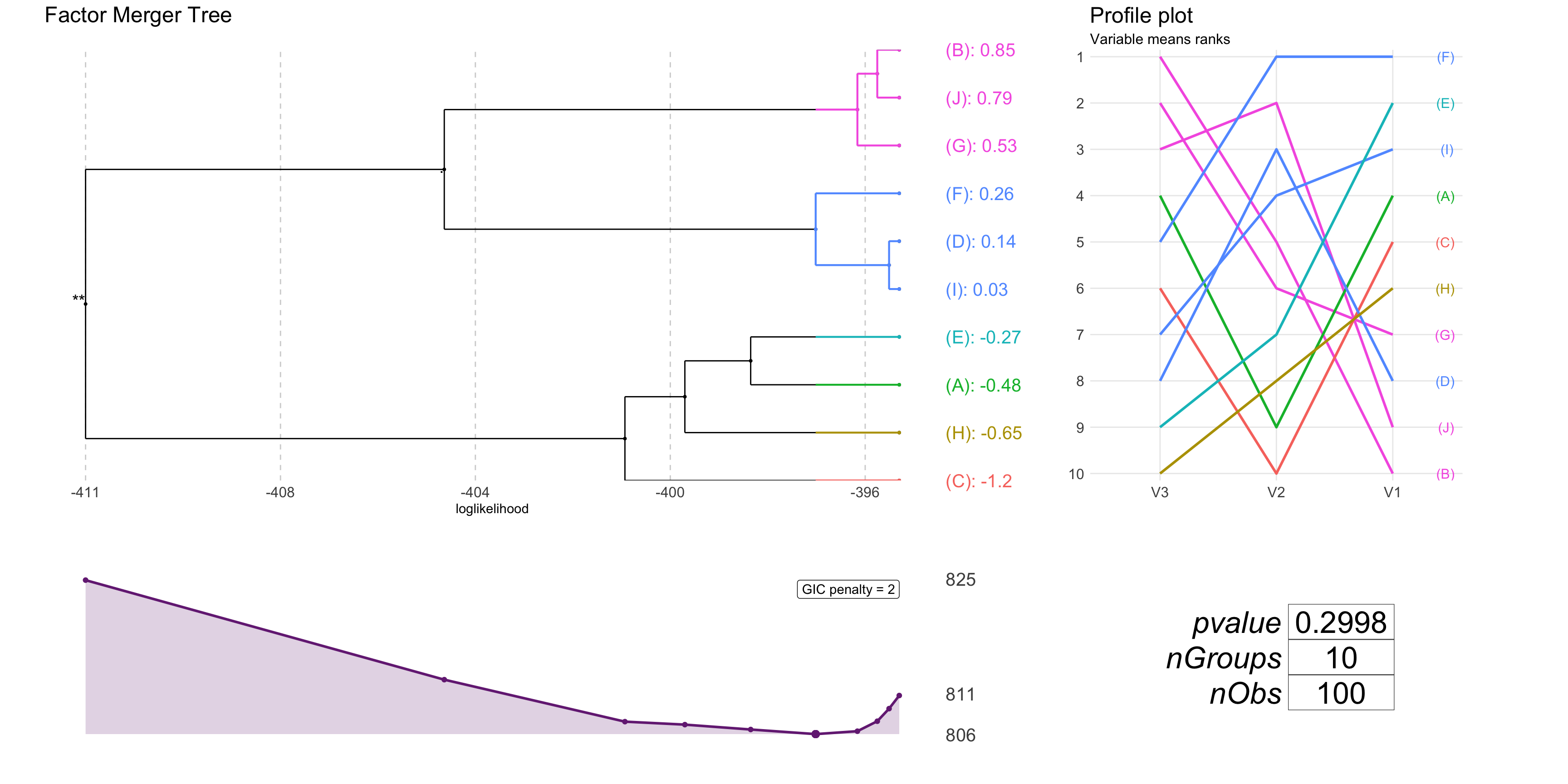

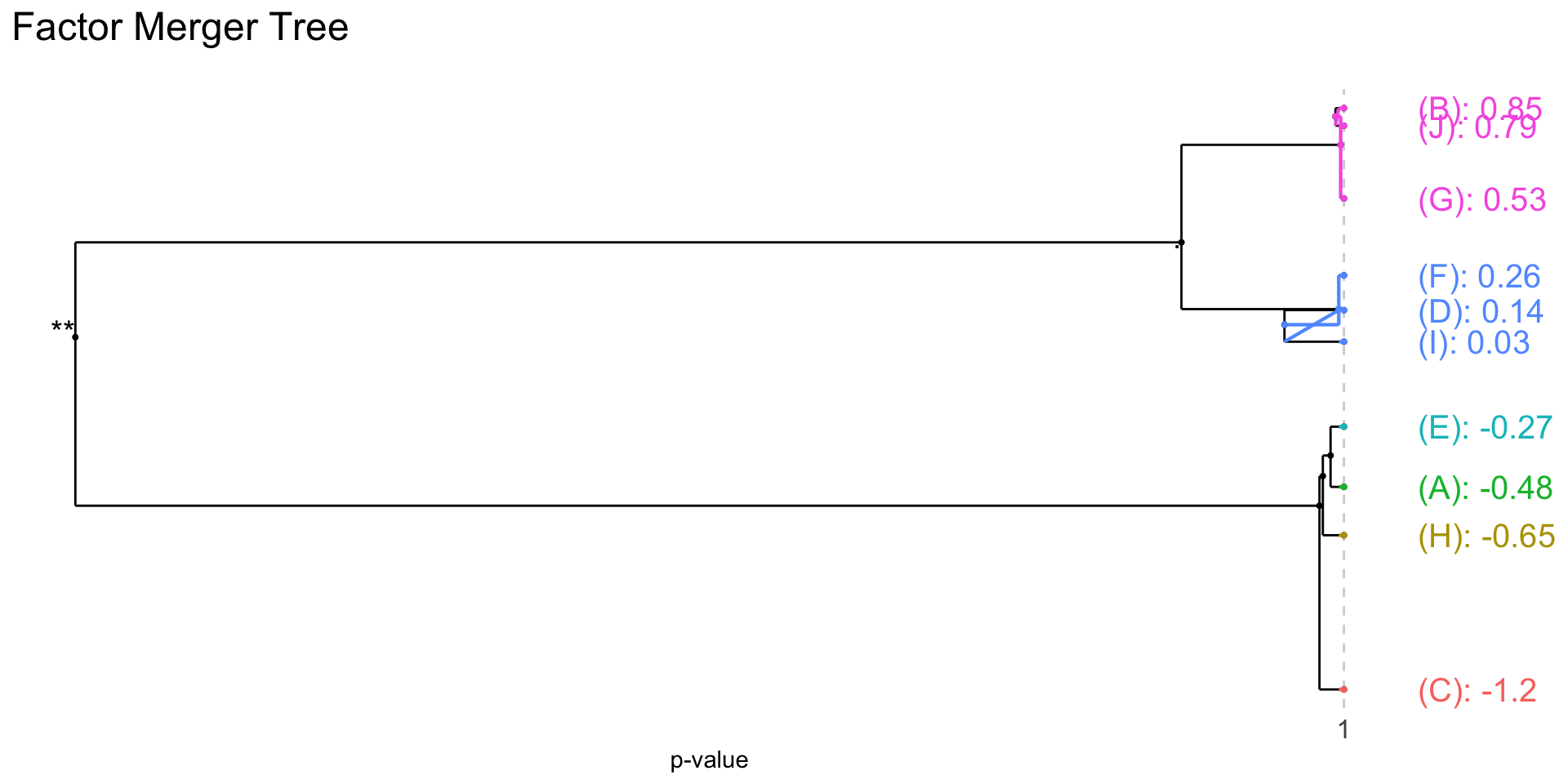

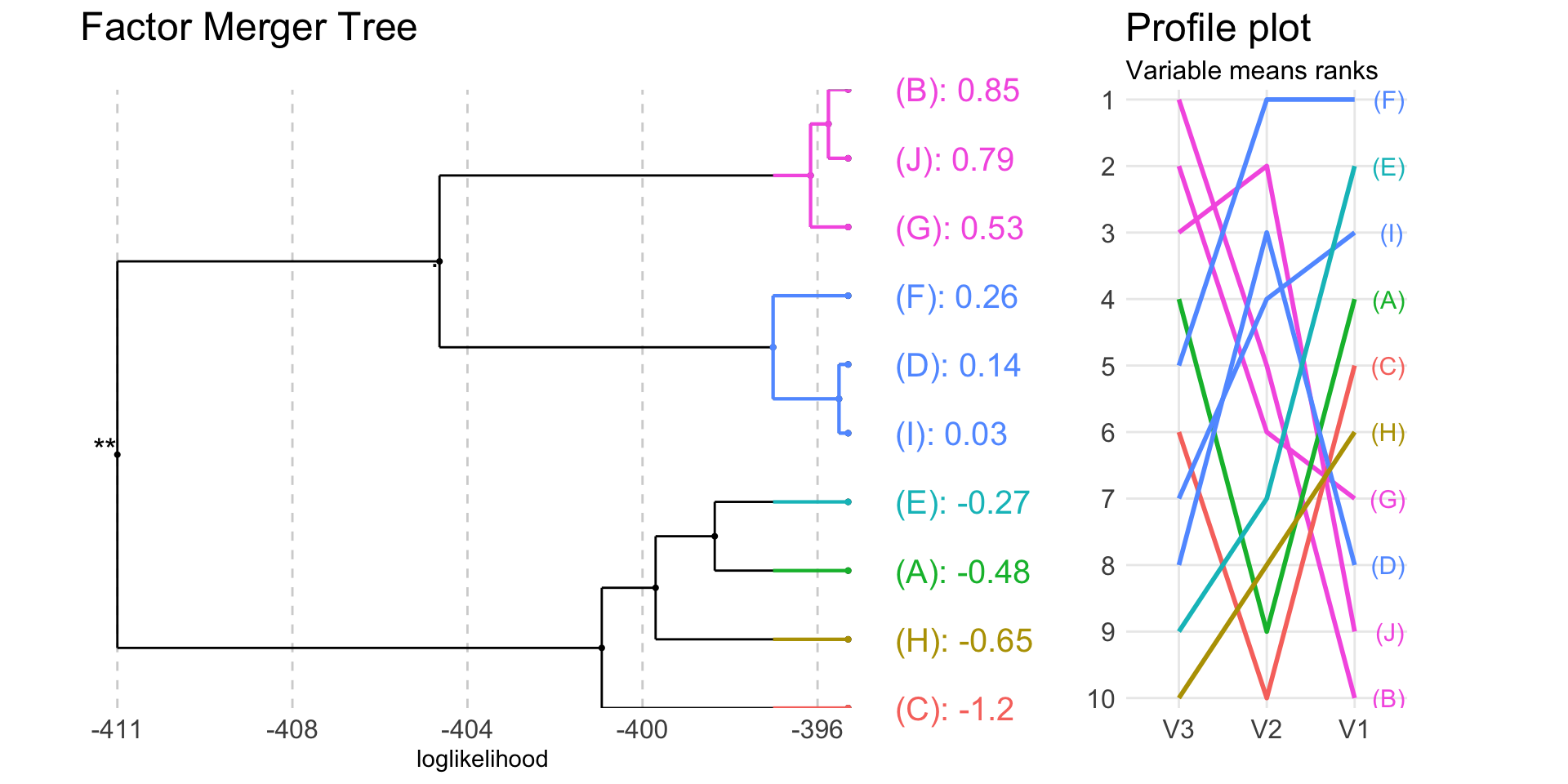

Visualizations

We may plot results using function plot.

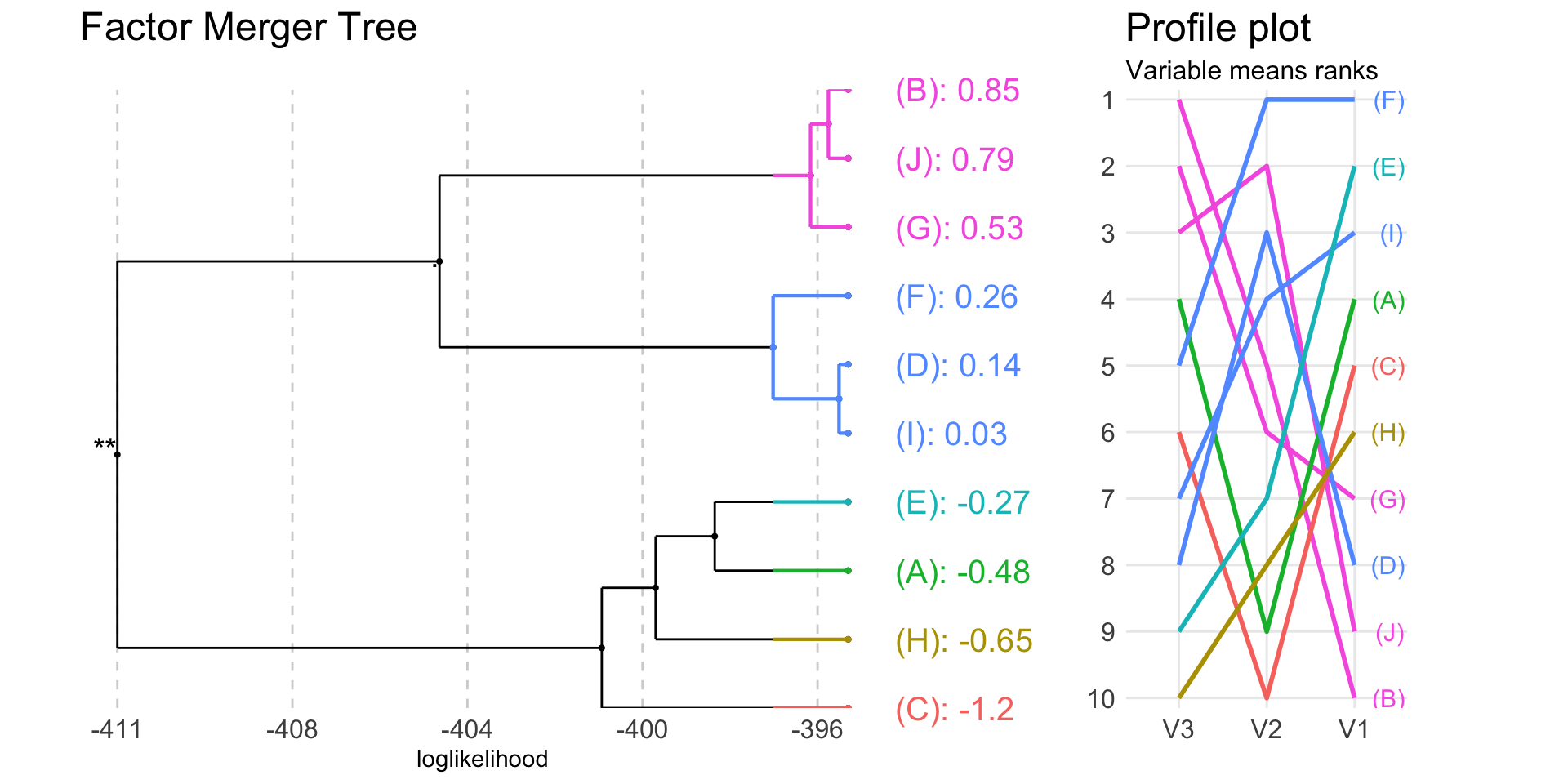

plot(fm, panel = "all", nodesSpacing = "equidistant", colorCluster = TRUE)

plot(fmAll, panel = "tree", statistic = "p-value",

nodesSpacing = "effects", colorCluster = TRUE)

plot(fm, colorCluster = TRUE, panel = "response")

The heatmap on the right shows means of all variables taken into analysis by groups.

plot(fm, colorCluster = TRUE, panel = "response", responsePanel = "profile")

In the above plots colours are connected with the group. The plot on the right shows means rankings for all variables included in the algorithm.

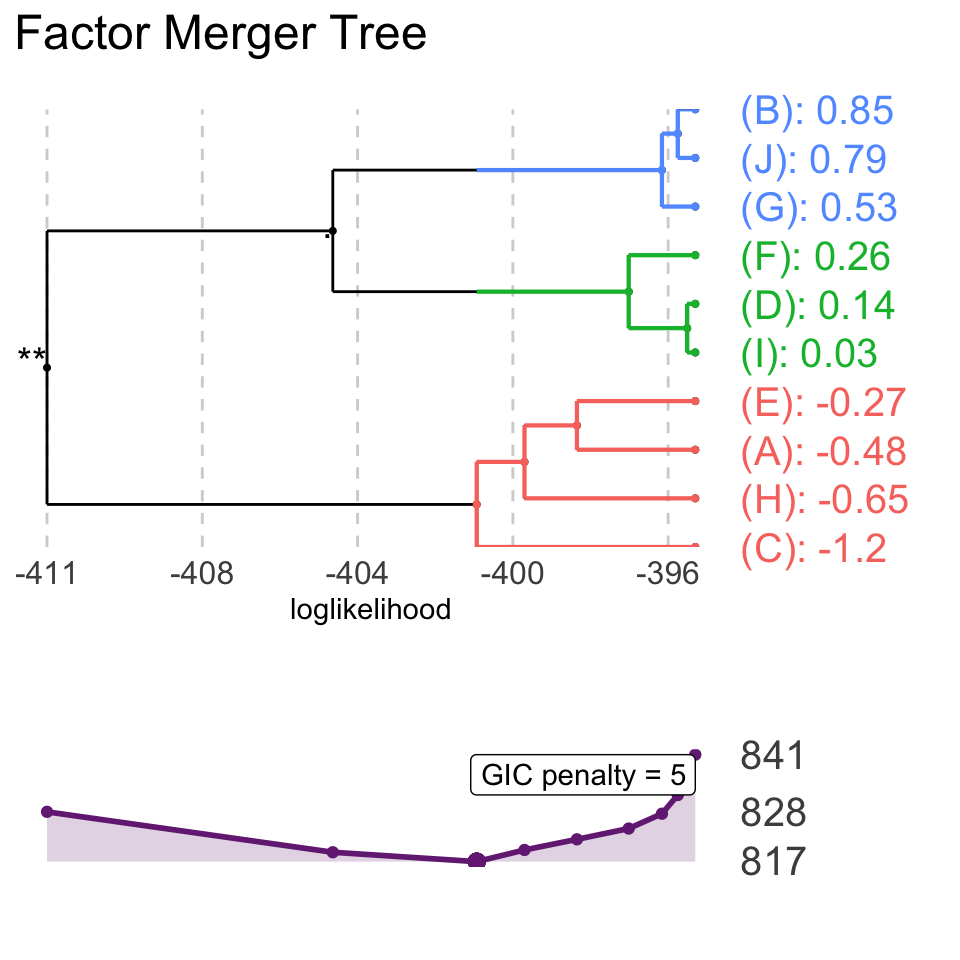

It is also possible to plot GIC together with the merging path plot.

plot(fm, panel = "GIC", penalty = 5)

Model with the lowest GIC is marked.

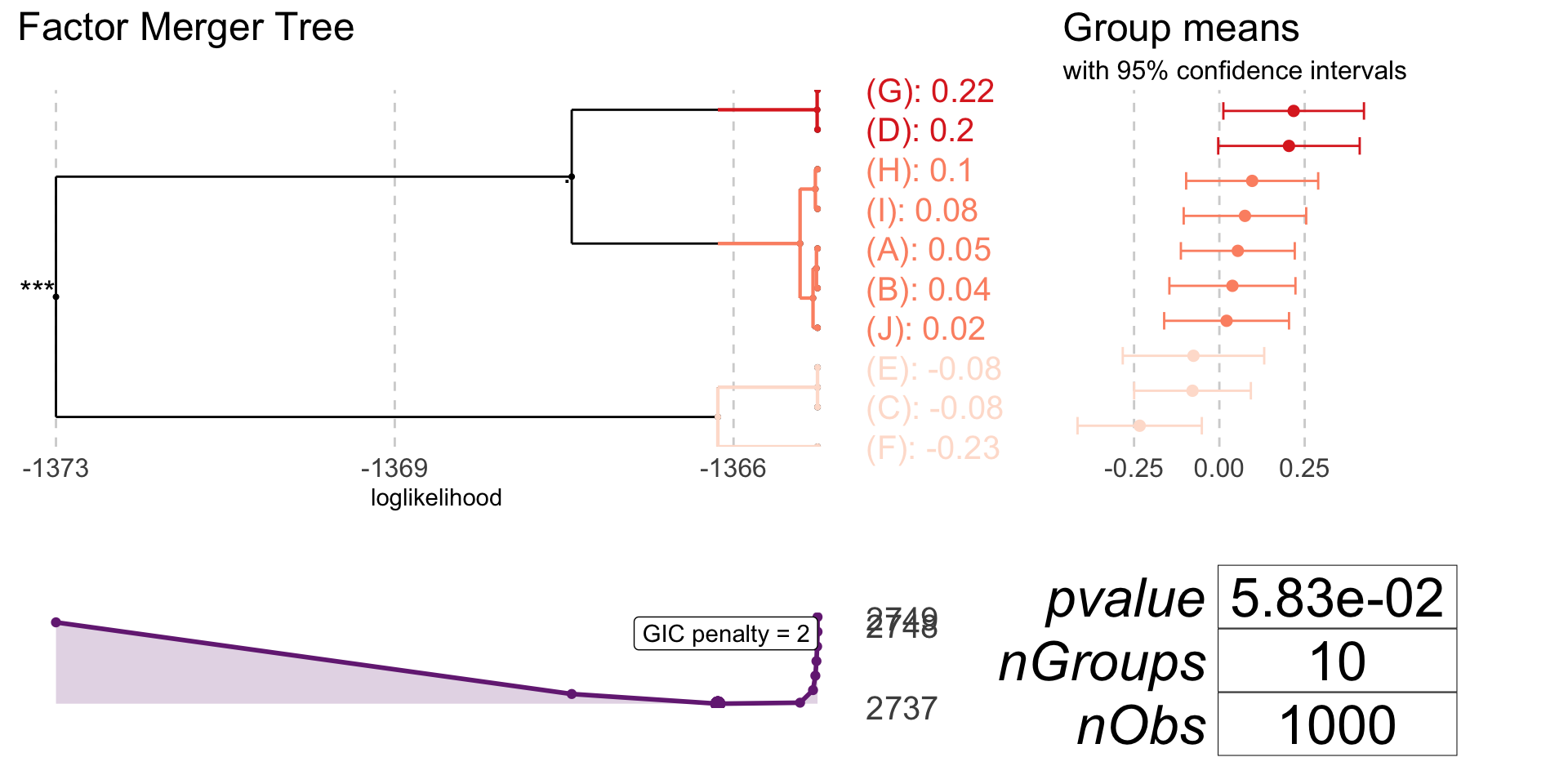

One-dimensional Gaussian model

oneDimRandSample <- generateSample(1000, 10)oneDimFm <- mergeFactors(response = oneDimRandSample$response, factor = oneDimRandSample$factor,

method = "fixed")

mergingHistory(oneDimFm, showStats = TRUE) %>%

kable()| groupA | groupB | model | pvalVsFull | pvalVsPrevious | |

|---|---|---|---|---|---|

| 0 | -1364.605 | 1.0000 | 1.0000 | ||

| 1 | (C) | (E) | -1364.605 | 0.9831 | 0.9831 |

| 2 | (D) | (G) | -1364.610 | 0.9955 | 0.9263 |

| 3 | (B) | (A) | -1364.618 | 0.9990 | 0.9008 |

| 4 | (I) | (H) | -1364.630 | 0.9997 | 0.8751 |

| 5 | (J) | (B)(A) | -1364.657 | 0.9998 | 0.8188 |

| 6 | (J)(B)(A) | (I)(H) | -1364.803 | 0.9989 | 0.5888 |

| 7 | (F) | (C)(E) | -1365.736 | 0.9451 | 0.1731 |

| 8 | (J)(B)(A)(I)(H) | (D)(G) | -1367.393 | 0.6991 | 0.0692 |

| 9 | (F)(C)(E) | (J)(B)(A)(I)(H)(D)(G) | -1373.241 | 0.0463 | 0.0006 |

plot(oneDimFm, palette = "Reds")

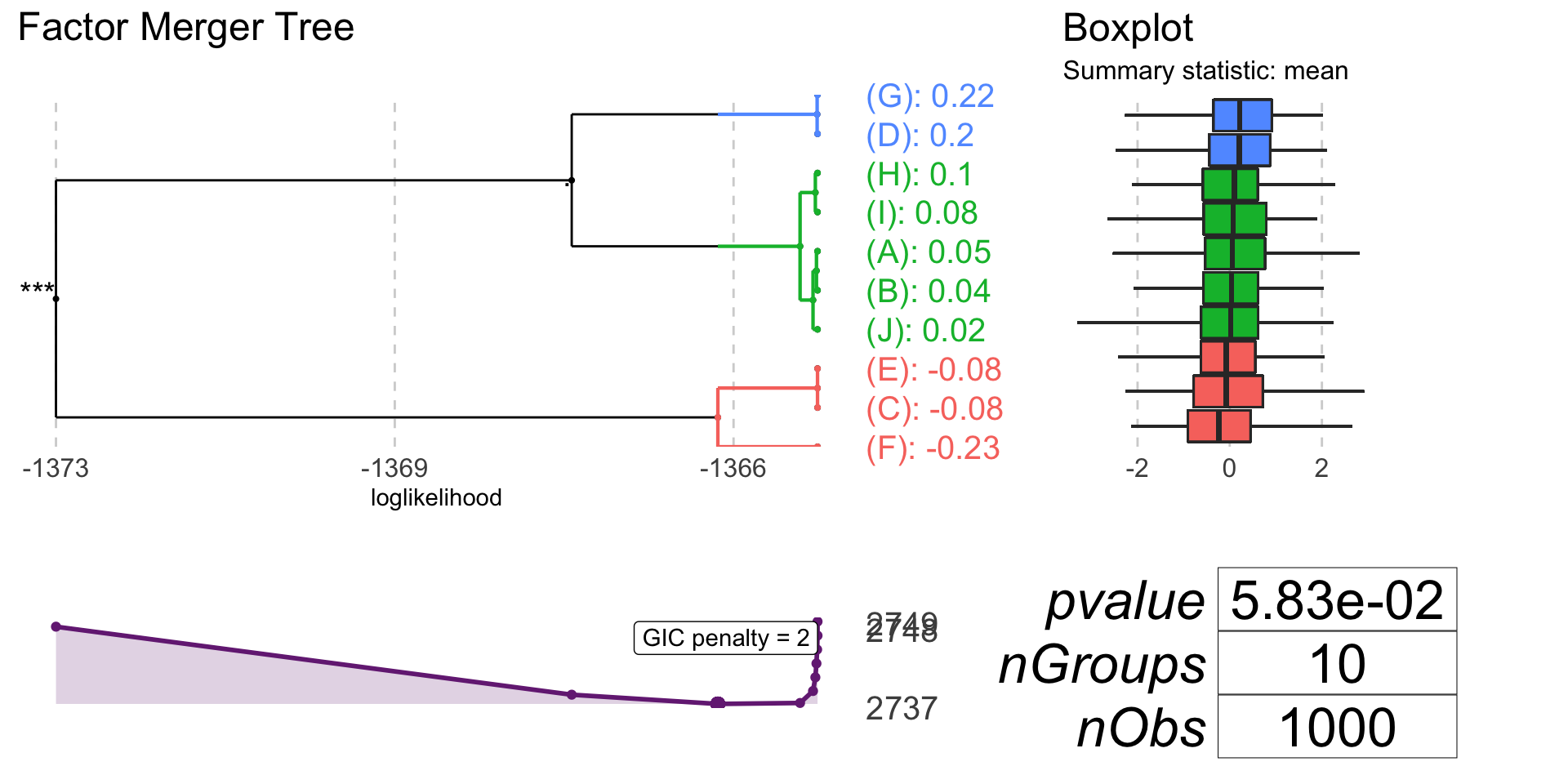

plot(oneDimFm, responsePanel = "boxplot", colorCluster = TRUE)

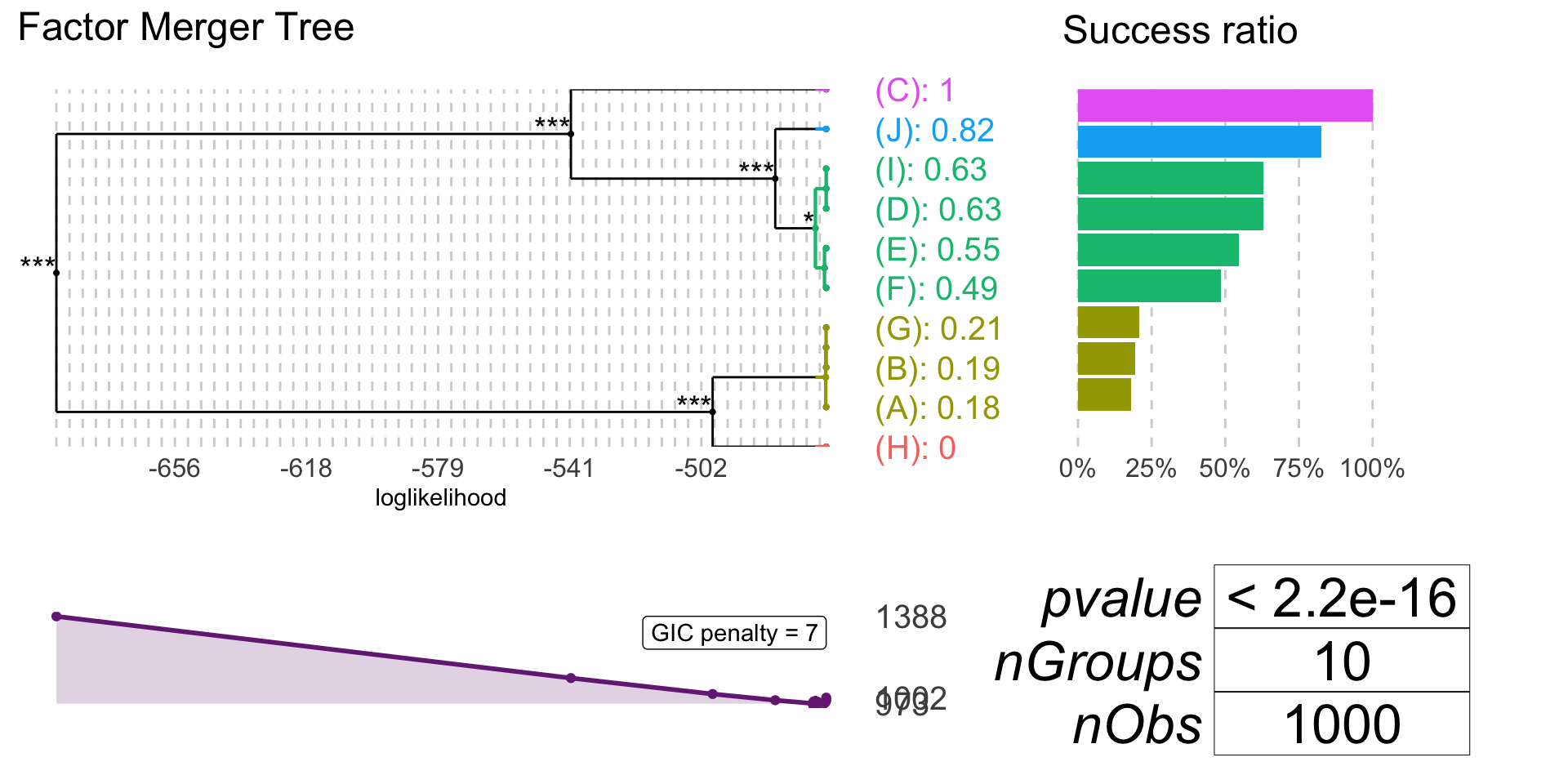

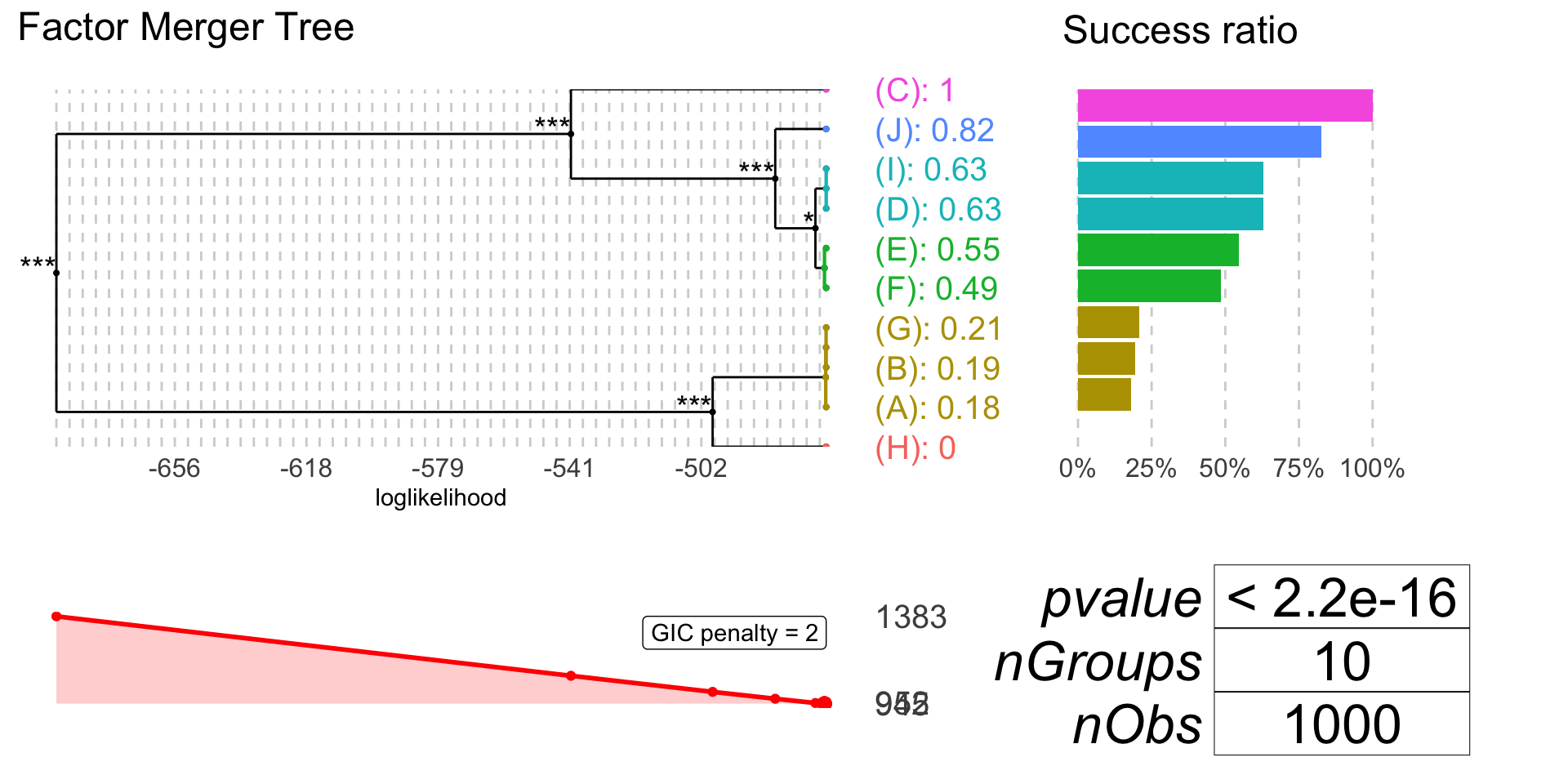

Binomial model

If family = "binomial" response must have to values: 0 and 1 (1 is interpreted as success).

binomRandSample <- generateSample(1000, 10, distr = "binomial")

table(binomRandSample$response, binomRandSample$factor) %>%

kable()| H | A | B | G | F | E | D | I | J | C | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 99 | 78 | 79 | 92 | 54 | 45 | 36 | 36 | 17 | 0 |

| 1 | 0 | 17 | 19 | 24 | 51 | 54 | 61 | 61 | 80 | 97 |

binomFm <- mergeFactors(response = binomRandSample$response,

factor = binomRandSample$factor,

family = "binomial",

method = "fast-adaptive")

mergingHistory(binomFm, showStats = TRUE) %>%

kable()| groupA | groupB | model | pvalVsFull | pvalVsPrevious | |

|---|---|---|---|---|---|

| 0 | -465.8888 | 1.0000 | 1.0000 | ||

| 1 | (D) | (I) | -465.8888 | 1.0000 | 1.0000 |

| 2 | (B) | (G) | -465.9169 | 0.9723 | 0.8127 |

| 3 | (A) | (B)(G) | -466.0197 | 0.9670 | 0.6502 |

| 4 | (F) | (E) | -466.3840 | 0.9113 | 0.3934 |

| 5 | (F)(E) | (D)(I) | -469.0360 | 0.2786 | 0.0213 |

| 6 | (F)(E)(D)(I) | (J) | -480.7588 | 0.0000 | 0.0000 |

| 7 | (H) | (A)(B)(G) | -499.0326 | 0.0000 | 0.0000 |

| 8 | (F)(E)(D)(I)(J) | (C) | -540.3831 | 0.0000 | 0.0000 |

| 9 | (H)(A)(B)(G) | (F)(E)(D)(I)(J)(C) | -690.5529 | 0.0000 | 0.0000 |

plot(binomFm, colorCluster = TRUE, penalty = 7)

plot(binomFm, gicPanelColor = "red")

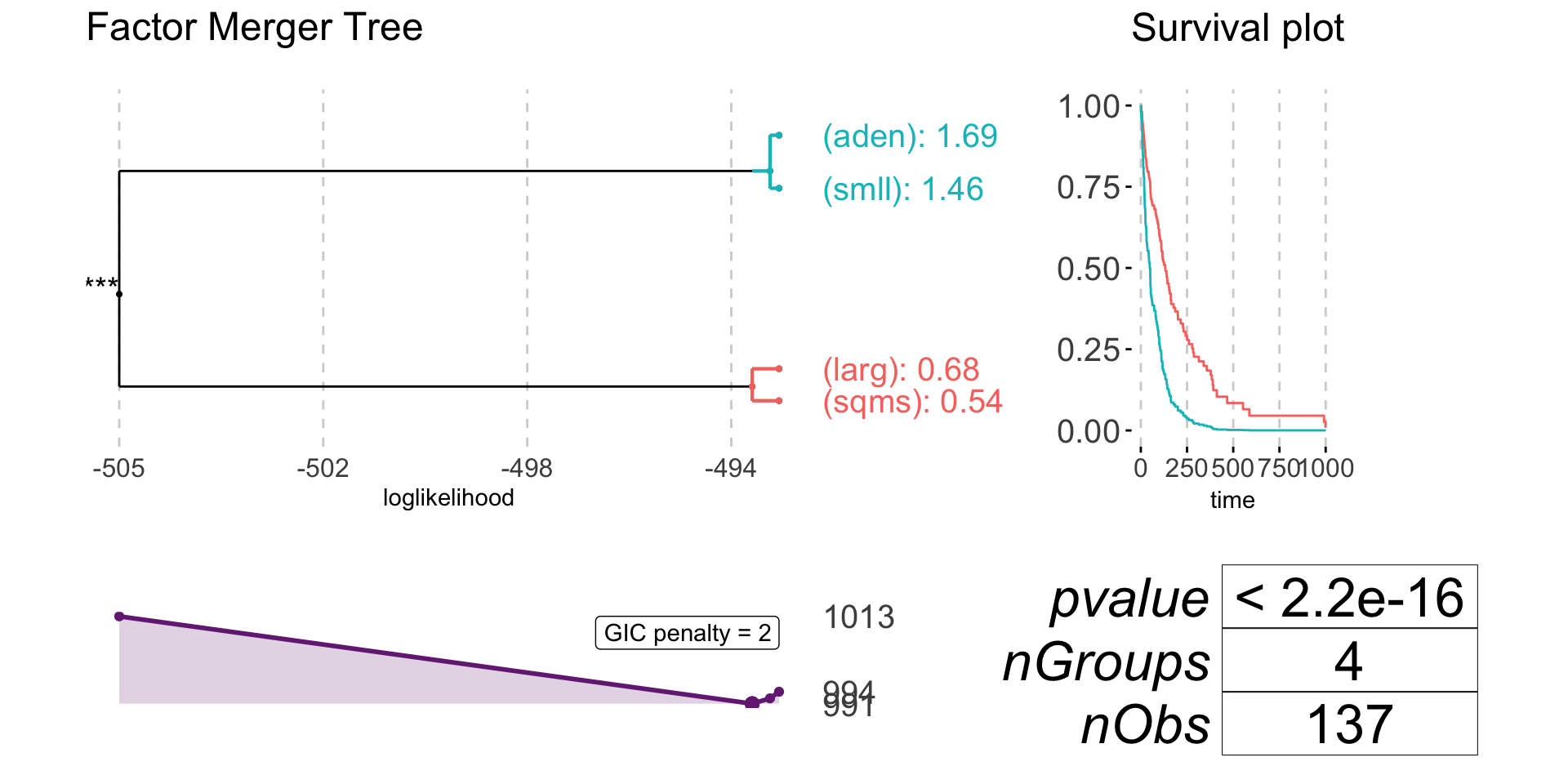

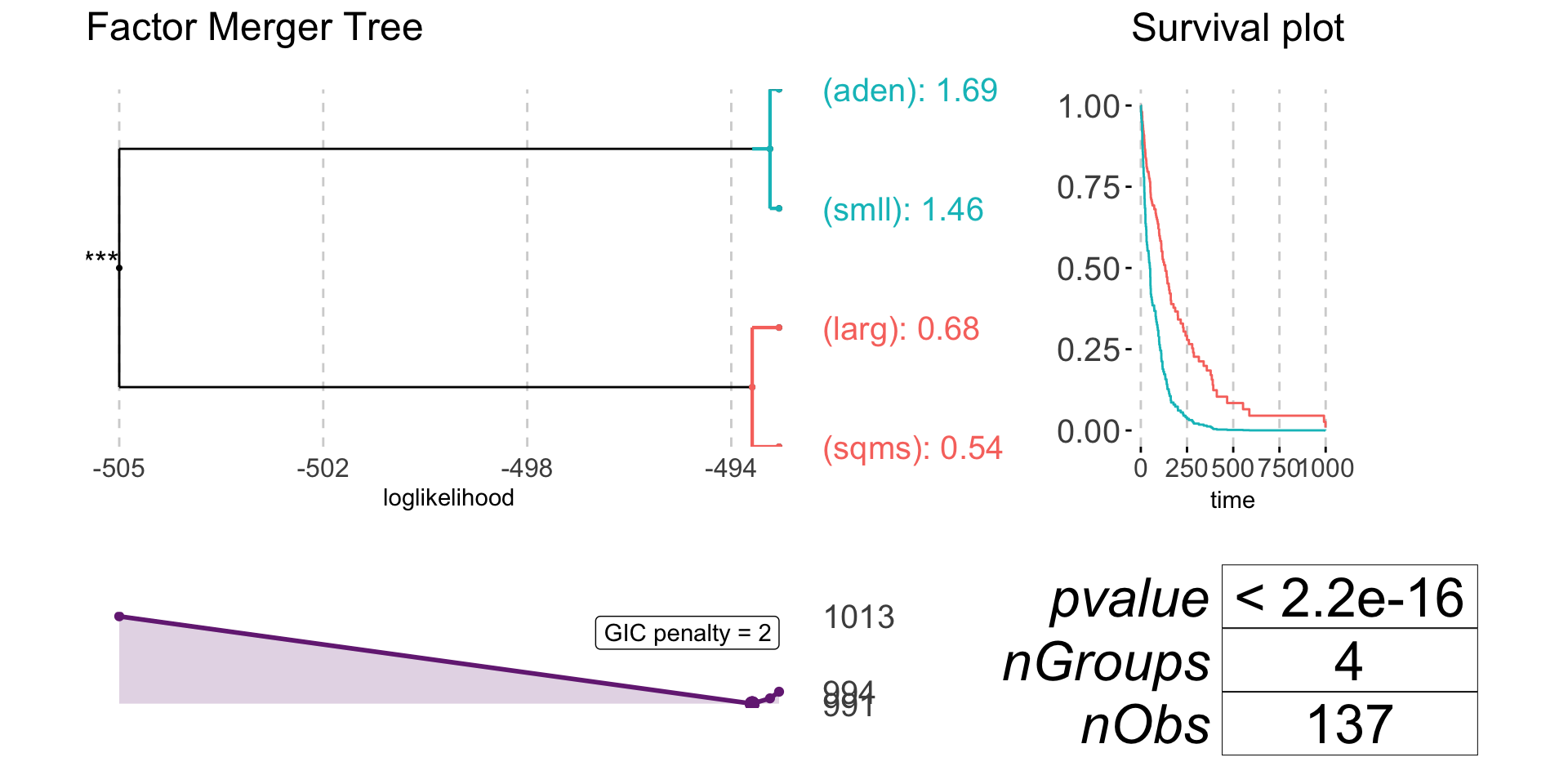

Survival model

If family = "survival" response must be of a class Surv.

library(survival)

data(veteran)

survResponse <- Surv(time = veteran$time,

event = veteran$status)

survivalFm <- mergeFactors(response = survResponse,

factor = veteran$celltype,

family = "survival") mergingHistory(survivalFm, showStats = TRUE) %>%

kable()| groupA | groupB | model | pvalVsFull | pvalVsPrevious | |

|---|---|---|---|---|---|

| 0 | -493.0247 | 1.0000 | 1.0000 | ||

| 1 | (smll) | (aden) | -493.1951 | 0.5594 | 0.5594 |

| 2 | (sqms) | (larg) | -493.5304 | 0.6031 | 0.4128 |

| 3 | (sqms)(larg) | (smll)(aden) | -505.4491 | 0.0000 | 0.0000 |

plot(survivalFm)

plot(survivalFm, nodesSpacing = "effects", colorCluster = TRUE)